Consuming XCRI-CAP IV: Trainagain

This post summarises the 'consuming XCRI-CAP' work I did for the Trainagain service, a fairly straightforward SQL Server based system holding records for a few thousand short courses for use in a searchable website.

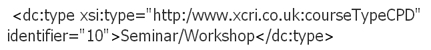

The purpose of the Trainagain database was to provide searchable data about short training events. It was not optimised for courses in the XCRI-CAP sense, though it could hold such data. One difference was the concept of an 'event': in Trainagain this was a 'short course' offered by a provider (usually an FE college or private training provider) and held at a single venue. So every XCRI-CAP presentation becomes an 'event' in Trainagain.
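To illustrate the presentation-to-event idea, here is a minimal Python sketch that flattens a feed into one record per presentation, repeating the course-level title on each. It is not the MapForce mapping I actually used. The namespace URIs are what I would expect for XCRI-CAP 1.2 and should be checked against the real feed, and the sample XML and the output keys (`provider`, `title`, `source_id`, `start`) are invented for illustration.

```python
import xml.etree.ElementTree as ET

# Namespace URIs assumed for XCRI-CAP 1.2; verify against the feed you consume.
NS = {
    "xcri": "http://xcri.org/profiles/1.2/catalog",
    "dc": "http://purl.org/dc/elements/1.1/",
    "mlo": "http://purl.org/net/mlo",
}

# Invented, heavily simplified sample feed (one course, two presentations).
SAMPLE = """<catalog xmlns="http://xcri.org/profiles/1.2/catalog"
         xmlns:dc="http://purl.org/dc/elements/1.1/"
         xmlns:mlo="http://purl.org/net/mlo">
  <provider>
    <dc:title>Example College</dc:title>
    <course>
      <dc:title>Food Hygiene</dc:title>
      <presentation>
        <dc:identifier>FH-01</dc:identifier>
        <mlo:start>2013-03-01</mlo:start>
      </presentation>
      <presentation>
        <dc:identifier>FH-02</dc:identifier>
        <mlo:start>2013-06-01</mlo:start>
      </presentation>
    </course>
  </provider>
</catalog>"""


def presentations_to_events(xml_text):
    """Return one flat dict per presentation, copying course-level data down."""
    root = ET.fromstring(xml_text)
    events = []
    for provider in root.findall("xcri:provider", NS):
        provider_title = provider.findtext("dc:title", default="", namespaces=NS)
        for course in provider.findall("xcri:course", NS):
            course_title = course.findtext("dc:title", default="", namespaces=NS)
            for pres in course.findall("xcri:presentation", NS):
                events.append({
                    "provider": provider_title,
                    "title": course_title,
                    "source_id": pres.findtext("dc:identifier", default="", namespaces=NS),
                    "start": pres.findtext("mlo:start", default="", namespaces=NS),
                })
    return events


if __name__ == "__main__":
    for event in presentations_to_events(SAMPLE):
        print(event)
```

A course with two presentations therefore yields two event records that share a title but differ in identifier and start date.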

Most of the course data in the Trainagain database is held in a single table called Event. There are links to tables for Country (in the world), Area in the UK (primarily counties), Category (very limited reference data about the subject of study) and EventType (4 values: 'Course', 'Network', 'Apprenticeship', 'Consultancy'). There are other tables not relevant to this work. The Event table has a good number of fields that match the XCRI-CAP requirements. These include conventional items such as start and end dates, duration, venue information, title and so on, and also some larger descriptive fields such as a general summary, eligibility and outcome.

Trainagain has some mandatory requirements for data that must be included in records in the Event table. These data items are reference data: Country, Area, Category and EventType, as specified in the relationships described above, plus Region (EU region).

The overall approach I took was iterative, in order to discover the practical difficulties and their solutions; it was by no means production-level! Starting with one institution's data, I mapped XCRI-CAP to the Trainagain structure using Altova MapForce. This tool generates SQL statements that can be used to import data into the database, showing error messages on failure. I used the error messages to formulate successive new mappings and sub-processes, until the first institution's data loaded successfully. Having recorded the mapping and process, I then used them to import the other institutions' data, which revealed a small number of additional difficulties owing to differences in the XCRI-CAP content. For the last two institutions, relatively few difficulties were encountered.

One difficulty was that reference data had to be pre-populated on the records, otherwise SQL Server would refuse to load them. Reference data included Trainagain's existing esoteric (but short) subject list. As the latter had only very limited utility, I replaced it with the LDCS classification, and each course record was necessarily classified with LDCS codes. Other reference data imported was geographical data related to the venues, namely Country (UK), Region (EU region) and Area (county). For EventType, default reference data values were selected on import (for most XCRI-CAP data this was, unsurprisingly, 'Course'). For simplicity the geographical reference data was loaded just for the relevant areas, using a manual lookup of appropriate values. The reference data tables could easily be populated using whole UK data sets.

The main data was loaded into the database using MapForce's SQL routines. Trainagain uses auto-number for Region and Area identifiers in the reference data tables, so these were mapped across to the main XCRI-CAP data using the reference data tables as lookups. Country defaults to the UK identifier.
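The lookup step can be sketched in Python roughly as follows. This is an illustration rather than what MapForce generated: it uses an in-memory SQLite database as a stand-in for SQL Server, and although the `Area` and `Event` table names come from Trainagain, the column names and the helpers `make_demo_db`, `area_lookup` and `load_events` are hypothetical.

```python
import sqlite3


def make_demo_db():
    """Build a tiny stand-in schema: Area reference table plus Event."""
    conn = sqlite3.connect(":memory:")
    # SQLite only enforces foreign keys when asked to.
    conn.execute("PRAGMA foreign_keys = ON")
    conn.execute(
        "CREATE TABLE Area ("
        "AreaID INTEGER PRIMARY KEY AUTOINCREMENT, Name TEXT UNIQUE NOT NULL)"
    )
    conn.execute(
        "CREATE TABLE Event ("
        "EventID INTEGER PRIMARY KEY AUTOINCREMENT, Title TEXT NOT NULL, "
        "AreaID INTEGER NOT NULL REFERENCES Area(AreaID))"
    )
    # Pre-populate the reference data before any events are loaded.
    conn.executemany("INSERT INTO Area (Name) VALUES (?)", [("Devon",), ("Cornwall",)])
    conn.commit()
    return conn


def area_lookup(conn):
    """Map lower-cased area names to their auto-numbered identifiers."""
    rows = conn.execute("SELECT AreaID, Name FROM Area")
    return {name.lower(): area_id for area_id, name in rows}


def load_events(conn, events):
    """Insert events whose area resolves; return the ones that do not."""
    lookup = area_lookup(conn)
    rejected = []
    for event in events:
        area_id = lookup.get(event["area"].strip().lower())
        if area_id is None:
            rejected.append(event)  # needs new reference data or a manual fix
            continue
        conn.execute(
            "INSERT INTO Event (Title, AreaID) VALUES (?, ?)",
            (event["title"], area_id),
        )
    conn.commit()
    return rejected


if __name__ == "__main__":
    conn = make_demo_db()
    incoming = [
        {"title": "Food Hygiene", "area": "devon"},
        {"title": "Welding", "area": "Atlantis"},
    ]
    print("Rejected:", load_events(conn, incoming))
```

Collecting the unresolved records, rather than letting the database reject them one at a time, gives a single list of reference data gaps to fix before the next load.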

No insuperable problems were encountered, though I did find the following issues:

1. EventIDs were integers, so XCRI-CAP internalID values could not be used, as they are strings and may contain letters; an autonumber was used instead. This would be a stumbling block for any updating mechanism using partial files, as the XCRI-CAP identifiers could not be used to uniquely identify changed records.

2. The FullPrice field has to be an integer, so, where no value was available, the value '0' had to be used, which is incorrect.

3. Similarly, PlacesAvailable required an integer value, so if not available, the value '0' was used – potentially very misleading, as the course might be shown as having no places – perhaps a better default could be implemented.

4. Description, Eligibility and Outcome fields have character limits of 1,024, so the data might be truncated (Course Data Programme data definitions have 4,000 character limits, as a contrast).
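Issues 2 to 4 could be handled in a small pre-load step like the sketch below. It keeps the '0' default that Trainagain forced on me, but records a warning for every defaulted or truncated value so that the problem is at least visible. The field names are Trainagain's; the functions `to_optional_int` and `prepare_event`, and the warning list, are my own illustrative assumptions rather than part of the trial.

```python
MAX_TEXT = 1024  # character limit on Trainagain's descriptive fields
INT_FIELDS = ("FullPrice", "PlacesAvailable")
TEXT_FIELDS = ("Description", "Eligibility", "Outcome")


def to_optional_int(value):
    """Return an int, or None when the value is missing or not a whole number."""
    if value is None:
        return None
    text = str(value).strip()
    if not text:
        return None
    try:
        return int(text)
    except ValueError:
        return None


def prepare_event(record):
    """Coerce one incoming record to Trainagain's constraints.

    Returns (row, warnings) so that defaults and truncations can be reviewed.
    """
    row, warnings = {}, []
    for field in INT_FIELDS:
        value = to_optional_int(record.get(field))
        if value is None:
            warnings.append(f"{field}: no usable value, defaulted to 0")
            value = 0
        row[field] = value
    for field in TEXT_FIELDS:
        text = record.get(field) or ""
        if len(text) > MAX_TEXT:
            warnings.append(
                f"{field}: truncated from {len(text)} to {MAX_TEXT} characters"
            )
        row[field] = text[:MAX_TEXT]
    return row, warnings


if __name__ == "__main__":
    row, warnings = prepare_event({"FullPrice": "250", "Description": "x" * 4000})
    print(row["FullPrice"], row["PlacesAvailable"], len(row["Description"]))
    for warning in warnings:
        print("WARNING:", warning)
```

If the database columns could be made nullable, returning `None` instead of 0 would be the more honest choice, particularly for PlacesAvailable.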

This work did not represent production level aggregation, but was a series of trials to investigate the problems of aggregating data into an existing system.

The likely generic difficulties, compared with a web-based data entry system, that can be extrapolated from this work are:

- A requirement to include appropriate mandatory reference data in the data to be imported. Whether this should be within the XCRI-CAP feed is moot; for Trainagain it must be in the final data for loading into the database, so some pre-processing is needed.

- Reference data must use a supported vocabulary or classification system. For subjects in Trainagain, this trial used LDCS, requiring extra work with the data. If data is already classified, it might be possible to use mappings between vocabularies, followed by manual checking. Otherwise manual classification from scratch, or transforms from other fields, would be needed.

- Any manual alterations should be avoided, as these changes will be over-written when a new whole data set is imported. Alternatively an internal delta update process could be implemented to ensure that only genuine changes are made.

- Consuming XCRI-CAP data requires extra work from the aggregating organisation, over and above a web-based data entry system. However, the amount of work done overall between the aggregating organisation and the producers is reduced significantly once the new data exchange methods are in place. One of the pieces of new work is a reasonable quality mapping from XCRI-CAP to the database structure, including any necessary transformations. Another is a well-designed set of data definitions set out by the consuming organisation for use by the producers. Fortunately, once these data definitions are in place, the producers can create good quality feeds, and then the mapping from XCRI-CAP to the database structure only needs to be done once for the consuming system to cover all the producers.

- The experience from this work stream has shown that importing data using XCRI-CAP feeds is a practical proposition with an existing system not optimised for XCRI-CAP. Completely automatic loading of data into a live system with no intervention is not the aim; what is needed is a robust process to gather the XCRI-CAP data, carry out any pre-loading processes, including validation, on it, and then load it, with a final check that all is correctly implemented.
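The internal delta update process mentioned above could work along these lines. This is a sketch under assumptions, not something built for the trial: it supposes the consuming system stores each record's XCRI-CAP identifier alongside a fingerprint of its last loaded content (which also gives a crosswalk from string identifiers to the integer EventIDs). The functions `fingerprint` and `plan_delta` are hypothetical names.

```python
import hashlib
import json


def fingerprint(record):
    """Return a stable hash of a record's content, independent of key order."""
    canonical = json.dumps(record, sort_keys=True, ensure_ascii=False)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def plan_delta(stored, incoming):
    """Compare a new whole data set with what was loaded last time.

    stored:   {xcri_identifier: fingerprint} from the previous load
    incoming: {xcri_identifier: record} from the new feed
    """
    plan = {"insert": [], "update": [], "unchanged": [], "delete": []}
    for identifier, record in incoming.items():
        if identifier not in stored:
            plan["insert"].append(identifier)
        elif stored[identifier] != fingerprint(record):
            plan["update"].append(identifier)
        else:
            plan["unchanged"].append(identifier)
    # Anything loaded before but absent from the new feed is a deletion candidate.
    plan["delete"] = sorted(set(stored) - set(incoming))
    return plan


if __name__ == "__main__":
    previous = {
        "FH-01": fingerprint({"title": "Food Hygiene", "start": "2013-03-01"}),
        "WD-01": fingerprint({"title": "Welding", "start": "2013-04-01"}),
    }
    new_feed = {
        "FH-01": {"title": "Food Hygiene", "start": "2013-03-08"},  # date changed
        "FA-01": {"title": "First Aid", "start": "2013-05-01"},     # new record
    }
    print(plan_delta(previous, new_feed))
```

Only the identifiers listed under `insert`, `update` and `delete` then need to touch the database, so manual corrections to unchanged records are not overwritten.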

Decisions about the processes required will depend on specific issues:

- Is the architecture push or pull?

- Does the existing system use reference data? If so, how much, and how can that be included on loading the new data?

- Will the import be 'whole data sets' or updates?

- How frequently will the data be refreshed?

- How much use will be made of the identifiers in XCRI-CAP and how much of other internal identifiers?

- How will differences between XCRI-CAP data definitions and local data definitions be handled, particularly with regard to size of fields and expectations of blank or null fields?

It's still my view that with robust data definitions, good quality feeds and well-designed processes, it should be straightforward to consume XCRI-CAP data. What is needed is an attention to the details of the data requirements, and how to map and transform the incoming data to meet them. It is also worth bearing in mind that course marketing data is not particularly volatile, so minute-by-minute real-time data exchange is not a requirement; in many cases monthly or quarterly regular updates are sufficient.

XCRI-CAP: turn 12 days of keying into 3 hours of checking.
